Research

Recognition

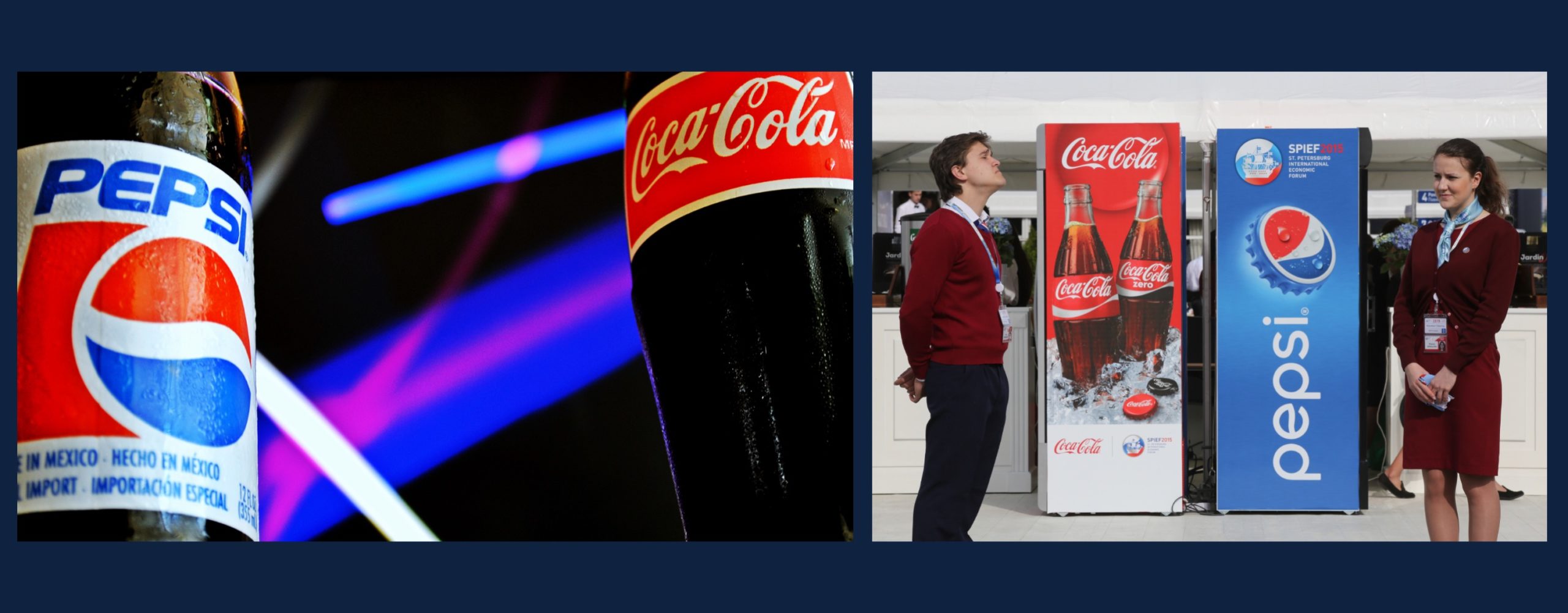

A system that tackles video, speech, object, cognitive and context recognition all at once. Given an image, say of a Coca-Cola or Pepsi product, and an audio caption, such as the fizz of its bottle, the model is expected to highlight in real time the regions of the image being described, along with their relevant cognitive and contextual cues.

Unlike current language technologies, the model doesn’t require manual transcriptions and annotations of the examples it’s trained on. Instead, it learns words directly from recorded speech clips and objects in raw images, and associates them with one another. Conventional speech-recognition systems, by contrast, require transcriptions of many thousands of hours of speech recordings. Using that transcribed data, such systems learn to map speech signals to specific words. That approach becomes especially problematic when, say, new terms enter our lexicon and the systems must be retrained.

Our combined speech-object recognition technique could save countless hours of manual labor and open new doors in speech and image recognition. The idea came from training a model in a manner similar to walking a child through the world and narrating what you’re seeing.

Reward-Objective Inference

We compiled thousands of images, videos and audio captions, of only Coca-Cola and Pepsi, in hundreds of different context categories, such as beaches, shopping malls, city streets, bedrooms, summer time, winter time, Christmas time, games, sports, friends, food, indoor, outdoor, etc. The models were trained on images such as Coca-Cola with a red hair band and blue eyes, wearing a blue and red dress (Pepsi colors), or Pepsi with a white lighthouse with a red roof in the background. The model learned to associate which pixels in the image corresponded with the words “Coke” or “Coca-Cola,” “Refreshing,” “Pepsi,” “Music,” “Pepsi with Pizza,” and “Coke in red roof.” When an audio caption was narrated, the model then highlighted each of those objects in the image as they were described.

The highest layer of the model combines the outputs of the two networks and maps the speech patterns to the image data.
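One way to picture this top layer is as a similarity table between audio-frame embeddings and image-region embeddings. The sketch below is only an illustration under assumptions: the source does not specify the embedding sizes, the similarity measure, or how scores are pooled, so the dot product, the mean-of-max pooling, and all function names here are hypothetical.

```python
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def similarity_map(audio_frames: List[Vector],
                   image_regions: List[Vector]) -> List[List[float]]:
    """Row i, column j holds the score of audio frame i against image region j.

    The dot product is an assumed similarity measure, not one named in the text.
    """
    return [[dot(frame, region) for region in image_regions]
            for frame in audio_frames]


def best_region_per_frame(sim: List[List[float]]) -> List[int]:
    """Index of the region to highlight for each audio frame."""
    return [max(range(len(row)), key=row.__getitem__) for row in sim]


def pair_score(sim: List[List[float]]) -> float:
    """Single score for a caption/image pair: mean over frames of the best region score."""
    return sum(max(row) for row in sim) / len(sim)


if __name__ == "__main__":
    # Toy 2-dimensional embeddings; real ones would come from the two networks.
    frames = [[1.0, 0.0], [0.0, 1.0]]
    regions = [[0.9, 0.1], [0.2, 0.8], [0.0, 0.0]]
    sim = similarity_map(frames, regions)
    print(best_region_per_frame(sim))
    print(pair_score(sim))
```

Reading the table row by row gives the region to highlight as each part of the caption is spoken, while the pooled score summarizes how well the whole caption fits the whole image.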

We ran the model on caption A with image A and caption B with image B, which are correct pairings. Then we ran it on random combinations, caption B with image A and caption A with image B, which are incorrect pairings, through our cognitive framework. After learning thousands of trade-offs and options, the model learns the speech signals that correspond to image signals, and associates those signals with words in the captions.
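Training on correct and incorrect pairings is commonly expressed as a ranking objective: a matched caption/image pair should score higher than a mismatched one by some margin. The source does not name its loss function, so the margin ranking loss below is an assumed stand-in, and the score table, margin value, and function names are hypothetical.

```python
from typing import List


def margin_ranking_loss(matched: float, mismatched: float,
                        margin: float = 1.0) -> float:
    """Zero once the matched score beats the mismatched score by at least `margin`."""
    return max(0.0, margin - matched + mismatched)


def batch_loss(scores: List[List[float]], margin: float = 1.0) -> float:
    """Average ranking loss over a small batch.

    scores[i][j] is the model's score for caption i with image j, so the
    diagonal holds the correct pairings (caption A with image A, and so on)
    and every off-diagonal entry is an incorrect pairing.
    """
    n = len(scores)
    total = 0.0
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            # Caption i paired with the wrong image j.
            total += margin_ranking_loss(scores[i][i], scores[i][j], margin)
            # Image i paired with the wrong caption j.
            total += margin_ranking_loss(scores[i][i], scores[j][i], margin)
    return total / n


if __name__ == "__main__":
    good = [[2.0, 0.0], [0.0, 2.0]]   # correct pairs already score highest
    bad = [[0.0, 2.0], [2.0, 0.0]]    # incorrect pairs score highest
    print(batch_loss(good))
    print(batch_loss(bad))
```

Minimizing a loss of this shape pushes the diagonal scores up and the off-diagonal scores down, which is the behavior the pairing procedure above asks for.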

Our intent is to design cross-modal [audio-visual-cognitive-context] alignments that can be inferred automatically by simply teaching the network which images and captions belong together in which context, and which preferential attachments don’t.

Our other intent is to minimize latency and resource use without any loss in projection quality, so that we can use the model in both online and offline contexts, as in natural selection.

Multi-modal knowledge representation learning aims to eliminate the redundancy between modes by exploiting their complementarity, and thereby improve the recognition rate. Our SCANN architecture, set within the cognitive framework, is based on audio command recognition, video and hand motion recognition, cognitive states and expected states of the target, and contexts. It is configured to process the instructions of each single-mode input, and then check the correctness of the recognized command through choice and reward functions in monadic and paired comparisons.

Finally, the obtained results are merged and output as instructions. The model compares the obtained recognition results twice and then fuses them. First, the keywords from video and gesture recognition are looked up in the speech recognition output. Second, the audio recognition output is processed by word segmentation, and the segmentation results are compared with the keywords from video and gesture recognition. Finally, the two comparison results are fused to obtain the final command, which improves identification accuracy.
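The two comparisons and the fusion step can be sketched roughly as follows. This is a minimal illustration under assumptions: the source does not describe the word segmenter or the fusion rule, so the regex tokenizer, the "confirmed by both passes" rule, and every function name here are hypothetical.

```python
import re
from typing import List


def retrieve_keywords(transcript: str, keywords: List[str]) -> List[str]:
    """Pass 1: look up each video/gesture keyword in the raw speech transcript."""
    text = transcript.lower()
    return [k for k in keywords if k.lower() in text]


def segment(transcript: str) -> List[str]:
    """Stand-in word segmentation: lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", transcript.lower())


def match_segments(transcript: str, keywords: List[str]) -> List[str]:
    """Pass 2: segment the transcript, then compare whole tokens with the keywords."""
    tokens = set(segment(transcript))
    return [k for k in keywords if k.lower() in tokens]


def fuse_command(transcript: str, keywords: List[str]) -> List[str]:
    """Fuse both comparisons; keep keywords confirmed by both passes (assumed rule)."""
    first = set(retrieve_keywords(transcript, keywords))
    second = set(match_segments(transcript, keywords))
    return [k for k in keywords if k in first and k in second]


if __name__ == "__main__":
    speech = "Please open the Pepsi bottle"
    visual_keywords = ["open", "pepsi", "close", "bot"]
    # "bot" matches as a substring of "bottle" in pass 1 but is not a whole
    # token in pass 2, so the fused command drops it.
    print(fuse_command(speech, visual_keywords))
```

Requiring agreement between the raw lookup and the segmented comparison filters out partial matches that either pass alone would accept, which is one plausible reading of how the double comparison improves accuracy.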